By Bill Hagerstrand, Director, Security Business, Marvell

It’s time for more security coffee talk with Bill! I was first exposed to the term “Confidential Compute” back in 2016, when I started reading about a radical new technology from Intel. Intel had introduced the idea in 2015 with its 6th generation of CPUs, code-named Skylake. The technology was named SGX (Software Guard Extensions). It is a set of CPU instructions that lets user-level or OS code define a trusted execution environment (TEE), using hardware support built into Intel CPUs. The CPU encrypts a portion of memory, called the enclave, and any data or code in the enclave is decrypted at runtime, and only inside the CPU. This protects enclave contents against read access by other code running on the same system, including more privileged code such as the operating system.

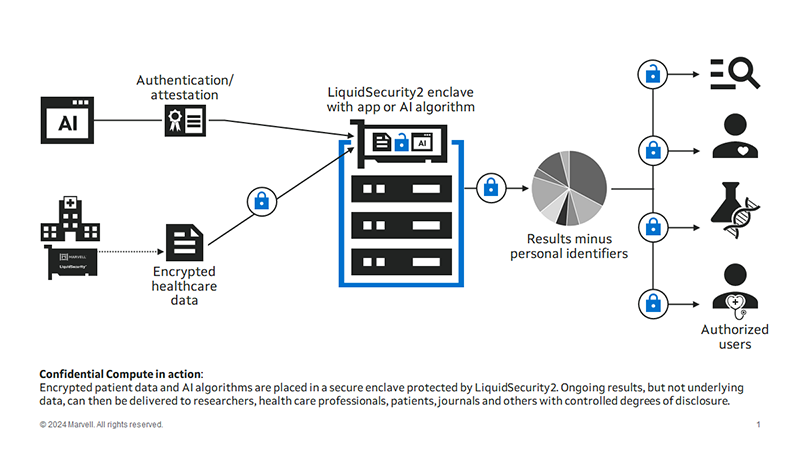

The idea was to protect data in the elusive “data in use” phase. Data exists in three stages: at rest, in motion, and in use. Data at rest can be encrypted with self-encrypting hard drives (pretty much standard these days), and most databases already support encryption. Data in motion is typically protected by encryption and authentication protocols such as TLS/SSL, HTTPS, and IPsec.

Data in use, however, is a fundamentally different challenge: for any application to make “use” of this data, the data must be decrypted. Intel solved this by creating a TEE within the CPU, where the application and its unencrypted data run securely and privately, isolated from any other user or code running on the same computer. AMD provides a similar technology it calls AMD SEV (Secure Encrypted Virtualization), and Arm calls its solution Arm CCA (Confidential Compute Architecture). Metaphorically, a TEE is like the scene in courtroom dramas where the judge and the attorneys debate the admissibility of evidence in a private room. Decisions are made in private; the outside world gets to see the result, but not the reasoning, and if there’s a mistake, the underlying materials can still be examined later.
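To make the “encrypted in memory, decrypted only inside the CPU” idea more concrete, here is a toy Python model. It rests on some loud assumptions:

- The class `ToyEnclaveCPU` and its `store`/`load` methods are hypothetical.
- The SHA-256 counter-mode keystream and HMAC tag stand in for the dedicated memory-encryption hardware a real CPU uses.
- It is not secure cryptography, and a Python object cannot enforce isolation the way hardware does.

What it does show is the trust boundary. The keys exist only inside the “CPU” object, while the dictionary standing in for DRAM only ever holds ciphertext and an integrity tag.

```python
import hashlib
import hmac
import secrets


class ToyEnclaveCPU:
    """Toy model of enclave memory protection (hypothetical, NOT secure crypto).

    The keys live only inside this object (the "CPU"). The dict standing in
    for DRAM only ever holds (nonce, ciphertext, integrity tag) tuples.
    """

    def __init__(self) -> None:
        # Python cannot truly hide these; the underscore is only a convention.
        self._enc_key = secrets.token_bytes(32)
        self._mac_key = secrets.token_bytes(32)
        self.dram: dict[int, tuple[bytes, bytes, bytes]] = {}

    def _keystream(self, nonce: bytes, length: int) -> bytes:
        # SHA-256 in counter mode: a stand-in for a hardware encryption engine.
        out = bytearray()
        counter = 0
        while len(out) < length:
            block = self._enc_key + nonce + counter.to_bytes(8, "big")
            out += hashlib.sha256(block).digest()
            counter += 1
        return bytes(out[:length])

    def _tag(self, addr: int, nonce: bytes, ct: bytes) -> bytes:
        # Binding the address into the MAC means ciphertext copied to
        # another address fails verification.
        msg = addr.to_bytes(8, "big") + nonce + ct
        return hmac.new(self._mac_key, msg, hashlib.sha256).digest()

    def store(self, addr: int, plaintext: bytes) -> None:
        """Encrypt as data leaves the CPU for memory."""
        nonce = secrets.token_bytes(16)  # fresh nonce per write
        ks = self._keystream(nonce, len(plaintext))
        ct = bytes(p ^ k for p, k in zip(plaintext, ks))
        self.dram[addr] = (nonce, ct, self._tag(addr, nonce, ct))

    def load(self, addr: int) -> bytes:
        """Check integrity, then decrypt only inside the CPU boundary."""
        nonce, ct, tag = self.dram[addr]
        if not hmac.compare_digest(tag, self._tag(addr, nonce, ct)):
            raise ValueError("memory integrity check failed")
        ks = self._keystream(nonce, len(ct))
        return bytes(c ^ k for c, k in zip(ct, ks))


if __name__ == "__main__":
    cpu = ToyEnclaveCPU()
    cpu.store(0x1000, b"patient-record: A+")
    print(cpu.dram[0x1000][1])  # what other code snooping "DRAM" would see
    print(cpu.load(0x1000))     # b'patient-record: A+'
```

The application inside the “CPU” works on plaintext, while anything reading the simulated DRAM sees only ciphertext. Two kinds of interference make `load()` raise `ValueError`: tampering with the stored bytes, and copying them to a different address. This loosely mirrors the integrity protection some TEE implementations add on top of encryption.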

By Bill Hagerstrand, Security Solutions BU, Marvell

Time to grab a cup of coffee as I describe how the transition toward open, disaggregated, and virtualized networks (also known as cloud-native 5G) has created new challenges in an already heightened 4G/5G security environment.

5G networks move, process, and store an ever-increasing amount of sensitive data. Several factors drive this: faster connection speeds, the mission-critical nature of new enterprise, industrial, and edge computing/AI applications, and the proliferation of 5G-connected IoT devices and data centers. At the same time, evolving architectures are creating new security threat vectors. The opening of the 5G network edge is driven by O-RAN standards, which disaggregate the radio units (RUs), front-haul, mid-haul, and distributed units (DUs). Virtualization of the 5G network further disaggregates hardware and software. It introduces commodity servers running open-source software in virtual machines (VMs) or containers, from the DU to the core network.

As a result, these factors have driven improvements in 5G security standards, including additional protocols and new security features. But these measures alone are not enough to secure the 5G network in the cloud-native and quantum computing era. This blog details the growing need for cloud-optimized HSMs (Hardware Security Modules) and their many critical 5G use cases, from the device to the core network.
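For readers new to HSMs, the core property is that keys are generated and used inside the module's hardware boundary and are never exported. Applications refer to keys only through handles. The Python class below is a toy illustration of that interface pattern, not a real HSM API:

- The name `ToyHSM` and its methods are invented for this sketch.
- HMAC-SHA256 stands in for whatever signing or MAC algorithms a real module offers.
- A Python object provides none of the tamper resistance of real hardware.

Production HSMs are typically driven through standard interfaces such as PKCS #11.

```python
import hashlib
import hmac
import secrets


class ToyHSM:
    """Hypothetical HSM-style interface: callers get opaque key handles,
    never the key bytes. This only illustrates the API pattern."""

    def __init__(self) -> None:
        # handle -> secret key; no method ever returns the key bytes.
        # (Python cannot enforce this; the underscore is only a convention.)
        self._keys: dict[str, bytes] = {}

    def generate_key(self) -> str:
        """Create a key inside the 'module' and return only its handle."""
        handle = secrets.token_hex(8)
        while handle in self._keys:  # avoid an (unlikely) handle collision
            handle = secrets.token_hex(8)
        self._keys[handle] = secrets.token_bytes(32)
        return handle

    def sign(self, handle: str, message: bytes) -> bytes:
        """Compute an HMAC-SHA256 tag; the key itself stays inside."""
        return hmac.new(self._keys[handle], message, hashlib.sha256).digest()

    def verify(self, handle: str, message: bytes, tag: bytes) -> bool:
        """Constant-time comparison against a freshly computed tag."""
        return hmac.compare_digest(self.sign(handle, message), tag)

    def destroy_key(self, handle: str) -> None:
        """Real HSMs zeroize destroyed keys; here the entry is just deleted."""
        del self._keys[handle]


if __name__ == "__main__":
    hsm = ToyHSM()
    h = hsm.generate_key()
    tag = hsm.sign(h, b"config update v42")
    print(hsm.verify(h, b"config update v42", tag))
    print(hsm.verify(h, b"config update v43", tag))
```

The design choice worth noticing is that the caller's code holds only the handle `h` and the resulting tags. Compromising the application therefore lets an attacker use the key while it has access, but not copy the key out.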

Copyright © 2025 Marvell, All rights reserved.