By Michael Kanellos, Head of Influencer Relations, Marvell

This story was also featured in Electronic Design

Some technologies experience stunning breakthroughs every year. In memory, it can be decades between major milestones. Burroughs invented magnetic memory in 1952 so ENIAC wouldn’t lose time pulling data from punch cards.¹ In the 1970s, DRAM replaced magnetic memory, and in the 2010s, HBM arrived.

Compute Express Link (CXL) represents the next big step forward. CXL devices essentially take advantage of available PCIe interfaces to open an additional conduit that complements the overtaxed memory bus. More lanes, more data movement, more performance.

Additionally, and arguably more importantly, CXL will change how data centers are built and operated. It’s a technology that will have a ripple effect. Here are a few scenarios for how it could affect infrastructure:

1. DLRM Gets Faster and More Efficient

Memory bandwidth, the amount of data that can be transferred from memory to a processor per second, has chronically been a bottleneck because processor performance increases far faster and more predictably than bus speed or bus capacity. To help close that gap, designers have added more lanes or added co-processors.

Marvell® Structera™ A does both. The first-of-its-kind device in a new industry category of memory accelerators, Structera A sports 16 Arm Neoverse N2 cores, 200 GB/sec of memory bandwidth and up to 4TB of memory, along with processing fabric and other Marvell-only technology, while consuming under 100 watts. It’s essentially a server-within-a-server with outsized memory bandwidth for bandwidth-intensive tasks like inference or deep learning recommendation models (DLRM). Cloud providers need to program their software to offload tasks to Structera A, but doing so brings a number of benefits.

Take a high-end x86 processor. Today it might sport 64 cores, 400 GB/sec of memory bandwidth and up to 2TB of memory (i.e., four top-of-the-line 512GB DIMMs), and consume a maximum of 400 watts, for a data transmission power rate of 1W per GB/sec.
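The power-per-bandwidth ratio above is simple division, and the same arithmetic can be applied to the Structera A figures quoted earlier. The sketch below is only illustrative: the function name is hypothetical, the bandwidth figures are assumed to be in GB/sec (consistent with the 1W per GB/sec ratio), and because Structera A is described as consuming "under 100 watts," its result is an upper bound rather than a measured value.

```python
def watts_per_gb_per_sec(power_watts: float, bandwidth_gb_per_sec: float) -> float:
    """Return the power spent per unit of memory bandwidth, in W per GB/sec."""
    if bandwidth_gb_per_sec <= 0:
        raise ValueError("bandwidth must be positive")
    return power_watts / bandwidth_gb_per_sec


# High-end x86 processor: 400 W maximum, 400 GB/sec (figures from the text).
x86_ratio = watts_per_gb_per_sec(400, 400)

# Structera A: under 100 W, 200 GB/sec (figures from the text).
# Using 100 W gives an upper bound on the ratio, not a measurement.
structera_upper_bound = watts_per_gb_per_sec(100, 200)

print(f"x86: {x86_ratio} W per GB/sec")
print(f"Structera A: under {structera_upper_bound} W per GB/sec")
```

On these quoted figures alone, the accelerator moves data at no more than half the power per GB/sec of the host processor.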

By Nicola Bramante, Senior Principal Engineer

Transimpedance amplifiers (TIAs) are one of the unsung heroes of the cloud and AI era.

At the recent OFC 2025 event in San Francisco, exhibitors demonstrated the latest progress on 1.6T optical modules featuring Marvell 200G TIAs. Recognized by multiple hyperscalers for their superior performance, Marvell 200G TIAs are becoming a standard component in 200G/lane optical modules for 1.6T deployments.

TIAs capture the signals that light detectors produce from incoming optical data and convert them into electrical signals that can be transmitted between, and used by, servers and processors in data centers and in scale-up and scale-out networks. Put another way, TIAs allow data to travel from photons to electrons. TIAs also amplify the signals for optical digital signal processors, which filter out noise and preserve signal integrity.

And they are pervasive. Virtually every data link inside a data center longer than three meters includes an optical module (and hence a TIA) at each end. TIAs are critical components of fully retimed optics (FRO), transmit retimed optics (TRO) and linear pluggable optics (LPO), enabling scale-up servers with hundreds of XPUs, active optical cables (AOC), and other emerging technologies, including co-packaged optics (CPO), where TIAs are integrated into optical engines that can sit on the same substrates where switch or XPU ASICs are mounted. TIAs are also essential for long-distance ZR/ZR+ interconnects, which have become the leading solution for connecting data centers and telecom infrastructure. Overall, TIAs are a must-have component for any optical interconnect solution, and the market for interconnects is expected to triple to $11.5 billion by 2030, according to LightCounting.
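The photons-to-electrons step described above can be sketched with an idealized model: a photodetector turns optical power into current, and the TIA turns that current into a voltage in proportion to its transimpedance gain. The function names and every numeric value below are hypothetical, chosen only for illustration; they are not specifications of any Marvell part, and the model ignores bandwidth limits and noise.

```python
import math


def photocurrent_amps(optical_power_watts: float,
                      responsivity_amps_per_watt: float) -> float:
    """Idealized photodetector: current is proportional to received optical power."""
    return optical_power_watts * responsivity_amps_per_watt


def tia_output_volts(photocurrent: float, transimpedance_ohms: float) -> float:
    """Idealized TIA: output voltage equals input current times transimpedance gain."""
    return photocurrent * transimpedance_ohms


# Hypothetical example values (assumptions, not product data):
received_power = 100e-6    # 100 microwatts of optical power at the detector
responsivity = 0.8         # detector responsivity in A/W
transimpedance = 5_000.0   # TIA gain in ohms (V/A)

current = photocurrent_amps(received_power, responsivity)
voltage = tia_output_volts(current, transimpedance)

print(f"photocurrent: {current * 1e6:.1f} uA, TIA output: {voltage:.2f} V")
```

The point of the sketch is the order of magnitude: the detector produces only microamps, which is why an amplifying stage has to come before the digital signal processor.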

By Michael Kanellos, Head of Influencer Relations, Marvell

Bigger is better, right? Look at AI: the story swirls with superlatives.

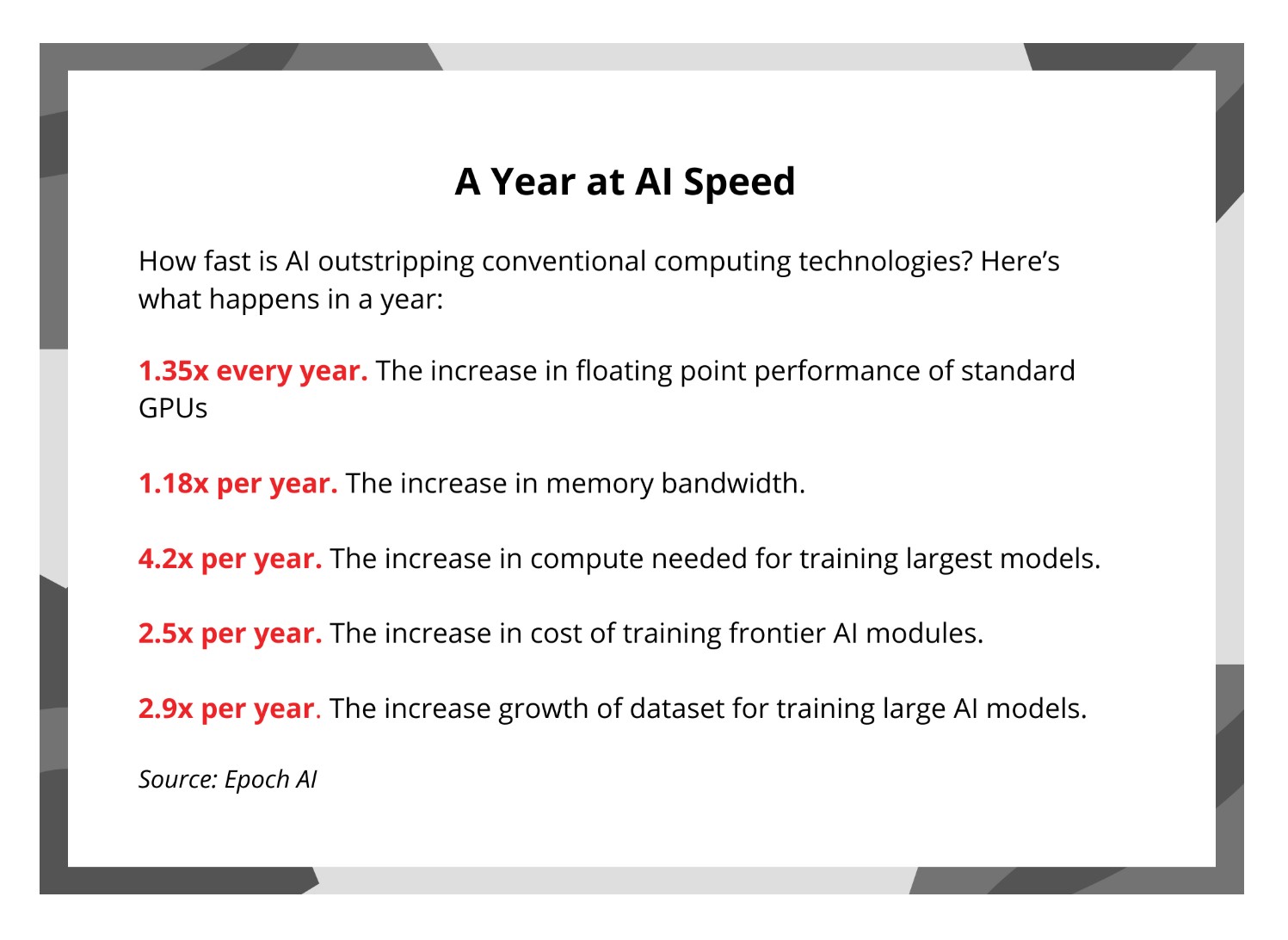

ChatGPT landed one million users within five days,¹ far surpassing the pace of any previous technology. The compute requirements of training notable AI models increase 4.5x per year, while training data sets mushroom by 3x per year.²
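To get a feel for what those annual multipliers imply, the sketch below compounds them over a few years. It assumes the quoted rates (4.5x for training compute, 3x for training data) hold constant year over year, which is a simplification made here and not a claim from the cited sources; the function name is hypothetical.

```python
def compound_growth(rate_per_year: float, years: int) -> float:
    """Return the cumulative growth factor after compounding a yearly multiplier."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return rate_per_year ** years


COMPUTE_RATE = 4.5  # training compute multiplier per year (from the text)
DATA_RATE = 3.0     # training data set multiplier per year (from the text)

for years in range(1, 4):
    compute_factor = compound_growth(COMPUTE_RATE, years)
    data_factor = compound_growth(DATA_RATE, years)
    print(f"after {years} year(s): compute x{compute_factor:.1f}, data x{data_factor:.0f}")
```

Under that constant-rate assumption, three years of growth means roughly 91 times the compute and 27 times the data.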

Bigger, however, comes at a price. Data center power consumption threatens to nearly triple by 2028, primarily because of AI.³ Water withdrawals, meanwhile, are escalating as well: by 2027, AI data centers could need up to 6.6 billion cubic meters, or about half of what the UK uses.⁴ The economic and environmental toll over the long run may not be sustainable.

Conceptually, it is easy to understand how a larger model translates into a "better and more capable" model: more layers and more parameters contribute to the quality and accuracy of the model. Yet can we keep extracting that value at the same cadence by continuing to increase size? Or will the curve start to plateau at some point?

By Nick Kucharewski, Senior Vice President and General Manager, Cloud Platform Business Unit, Marvell

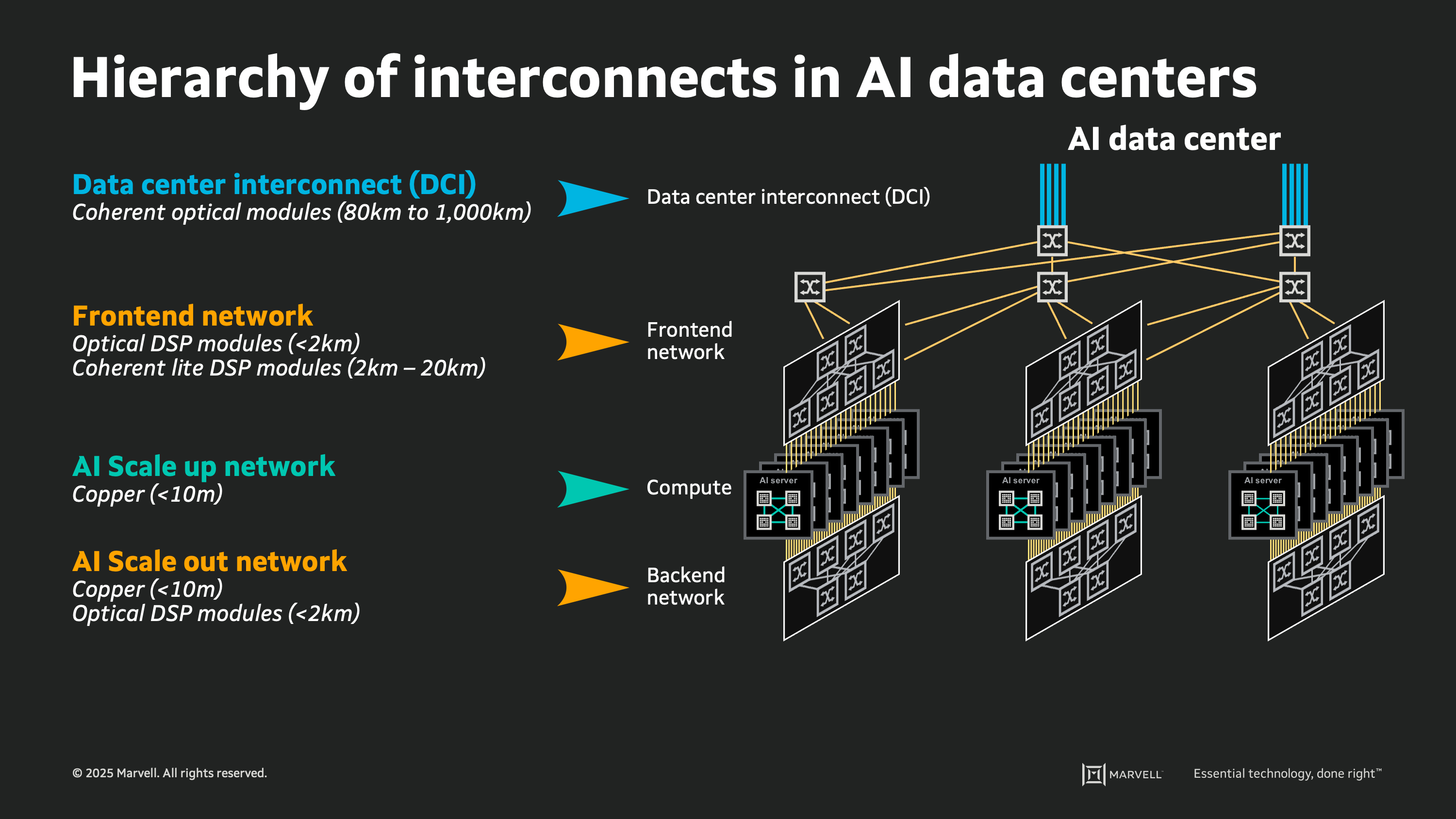

The rapid expansion in the size and capacity of AI workloads is significantly impacting both computing and network technologies in the modern data center. Data centers are continuously evolving to accommodate higher performance GPUs and AI accelerators (XPUs), increased memory capacities, and a push towards lower latency architectures for arranging these elements. The desire for larger clusters with shorter compute times has driven heightened focus on networking interconnects, with designers embracing state-of-the-art technologies to ensure efficient data movement and communication between the components comprising the AI cloud.

A large-scale AI cloud data center can contain hundreds of thousands, or millions, of individual links between the devices performing compute, switching, and storage functions. Inside the cloud, there is a tightly ordered fabric of high-speed interconnects: webs of copper wire and glass fiber each carrying digital signals at roughly 100 billion bits per second. Upon close inspection there is a pattern and a logical ordering to each link used for every connection in the cloud, which can be analyzed by considering the physical attributes of different types of links.

For inspiration, we can look back 80 years to the origins of modern computing, when John von Neumann posed the concept of memory hierarchy for computer architectures.¹ In 1945, von Neumann proposed a smaller, faster memory placed close to the compute circuitry and a larger, slower storage medium placed farther away, to enable a system delivering both performance and scale. This concept of memory hierarchy is now pervasive, with the terms “Cache”, “DRAM”, and “Flash” part of our everyday language. In today’s AI cloud data centers, we can analyze the hierarchy of interconnects in much the same way. It is a layered structure of links, strategically utilized according to their innate physical attributes of speed, power consumption, reach, and cost.

The hierarchy of interconnects

This hierarchy of interconnects provides a framework for understanding emerging interconnect technologies and assessing their potential impact on the next generation of AI data centers. By discussing the basic attributes of these technologies in the context of the goals of AI cloud design, we can estimate how they may be deployed in the coming years. By identifying the desired attributes and the key design constraints for each use case, we can also predict when new technologies will pass the "tipping point" that enables widespread adoption in future cloud deployments.

Marvell has once again earned high scores for a range of products in the Lightwave+BTR Innovation Reviews. A panel of experts from the optical communications community recognized five products from Marvell’s portfolio as among the best in the industry.

“On behalf of the Lightwave+BTR Innovation Reviews, I would like to congratulate Marvell on again achieving a well-deserved honoree status for multiple products,” said Lightwave+BTR Editor-in-Chief Sean Buckley. “This is a very competitive program allowing us to showcase and applaud the most innovative products that significantly impact the industry. It says a lot that Marvell is consistently recognized by these experts for its innovation.”