VMware vSAN ReadyNode Recipes Can Use Substitutions

When you are baking a cake, at times you substitute in different ingredients to make the result better. The same can be done with VMware vSAN ReadyNode configurations or recipes. Some changes to the documented configurations can make the end solution much more flexible and scalable.

VMware allows certain elements within a vSAN ReadyNode bill of materials (BOM) to be substituted. In this VMware blog, the author outlines which server elements in the BOM can change, including:

- CPU

- Memory

- Caching Tier

- Capacity Tier

- NIC

- Boot Device

However, changes can only be made with devices that are certified as supported by VMware. The list of certified I/O devices can be found in the VMware vSAN Compatibility Guide, and the full portfolio of NICs, FlexFabric Adapters and Converged Network Adapters from HPE and Cavium is supported.
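As a rough illustration of the rule above, the check comes down to two questions: is the element one of the substitutable categories, and is the replacement device on the certified list? The sketch below is a minimal example under stated assumptions. The function name, the data layout and the device names are hypothetical placeholders, not a VMware or HPE tool, and real certified entries have to come from the VMware vSAN Compatibility Guide.

```python
# Component categories the article lists as substitutable in a ReadyNode BOM.
SUBSTITUTABLE = {"CPU", "Memory", "Caching Tier", "Capacity Tier", "NIC", "Boot Device"}


def check_substitution(category, device, certified_devices):
    """Return (ok, reason) for swapping `device` into a ReadyNode BOM slot.

    `certified_devices` maps a category name to a set of device names.
    It is a hypothetical stand-in for a lookup against the compatibility guide.
    """
    if category not in SUBSTITUTABLE:
        return False, f"{category} is not a substitutable BOM element"
    if device not in certified_devices.get(category, set()):
        return False, f"{device} is not listed as certified for {category}"
    return True, "substitution allowed"


# Placeholder data only; these are not real part names.
certified = {"NIC": {"example-certified-nic"}}

print(check_substitution("NIC", "example-certified-nic", certified))
print(check_substitution("NIC", "unlisted-nic", certified))
print(check_substitution("Chassis", "any-device", certified))
```

The point of the sketch is the order of the checks: a device that is not on the certified list is rejected even when its category is one that may be substituted.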

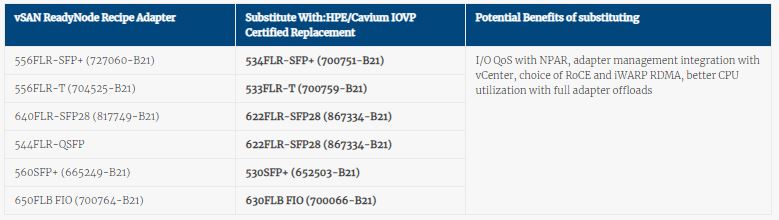

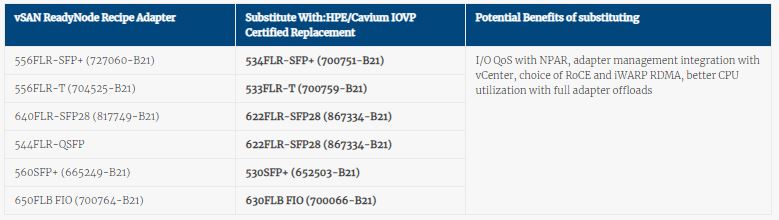

If we zero in on the HPE recipes for vSAN ReadyNode configurations, here are the substitutions you can make for I/O adapters.

OK, so we know what substitutions we can make in these vSAN storage solutions. What are the benefits to the customer of making this change?

There are several benefits to the HPE/Cavium technology compared to the other adapter offerings.

- HPE 520/620 Series adapters support Universal RDMA – the ability to support both RoCE and iWARP RDMA protocols with the same adapter.

- Why does this matter? Universal RDMA offers flexibility of choice when low latency is a requirement. RoCE works well if customers have already deployed lossless Ethernet infrastructure. iWARP is a good choice for greenfield environments because it runs over existing networks without the complexity of lossless Ethernet, and so scales more easily.

- Concurrent Network Partitioning (NPAR) and SR-IOV

- NPAR (Network Partitioning) allows for virtualization of the physical adapter port. SR-IOV offload moves management of the VM network from the hypervisor (CPU) to the adapter. With HPE/Cavium adapters, these two technologies can work together to optimize connectivity for virtual server environments and relieve the hypervisor (and thus the CPU) of managing VM traffic, while providing full Quality of Service at the same time.

- Storage Offload

- Ability to reduce CPU utilization by offering iSCSI or FCoE Storage offload on the adapter itself. The freed-up CPU resources can then be used for other, more critical tasks and applications. This also reduces the need for dedicated storage adapters, connectivity hardware and switches, lowering overall TCO for storage connectivity.

- Offloads in general – In addition to the RDMA, storage and SR-IOV offloads mentioned above, HPE/Cavium Ethernet adapters also support TCP/IP stateless offloads and DPDK small-packet acceleration offloads. Each of these offloads moves work from the CPU to the adapter, reducing the CPU utilization associated with I/O activity. As mentioned in my previous blog, because these offloads bypass tasks in the OS kernel, they also mitigate performance issues associated with the Spectre/Meltdown vulnerability fixes on x86 systems.

- Adapter management integration with vCenter – All HPE/Cavium Ethernet adapters are managed by Cavium’s QCC utility, which can be fully integrated into VMware vCenter. This provides a much simpler approach to I/O management in vSAN configurations.
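The RoCE-versus-iWARP guidance in the Universal RDMA bullet above can be read as a simple decision rule. The snippet below is only a sketch of that rule as the article states it. The function and parameter names are hypothetical, and a real deployment decision would weigh more factors than this one flag.

```python
def suggest_rdma_protocol(has_lossless_ethernet: bool) -> str:
    """Suggest an RDMA protocol following the article's rule of thumb.

    RoCE is suggested where lossless Ethernet is already deployed;
    iWARP is suggested otherwise, since it runs over existing networks.
    """
    return "RoCE" if has_lossless_ethernet else "iWARP"


# An existing data center with lossless Ethernet versus a greenfield site.
print(suggest_rdma_protocol(has_lossless_ethernet=True))
print(suggest_rdma_protocol(has_lossless_ethernet=False))
```

Because a Universal RDMA adapter supports both protocols, either answer can be served by the same hardware.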

In summary, if you are looking to deploy vSAN ReadyNode, you might want to fit in a substitution or two on the I/O front to take advantage of all the intelligent capabilities available in Ethernet I/O adapters from HPE/Cavium. Sure, the standard ingredients work, but the right substitution will make things more flexible and scalable and deliver a better overall experience for your client.
