By Michael Kanellos, Head of Influencer Relations, Marvell

This story was also featured in Electronic Design

Some technologies experience stunning breakthroughs every year. In memory, it can be decades between major milestones. Burroughs invented magnetic memory in 1952 so ENIAC wouldn’t lose time pulling data from punch cards.¹ In the 1970s, DRAM replaced magnetic memory, and in the 2010s, HBM arrived.

Compute Express Link (CXL) represents the next big step forward. CXL devices essentially take advantage of available PCIe interfaces to open an additional conduit that complements the overtaxed memory bus. More lanes, more data movement, more performance.

Additionally, and arguably more importantly, CXL will change how data centers are built and operated. It’s a technology that will have a ripple effect. Here are a few scenarios showing how it could affect infrastructure:

1. DLRM Gets Faster and More Efficient

Memory bandwidth, the amount of data that can be transferred from memory to a processor per second, has chronically been a bottleneck because processor performance increases far faster and more predictably than bus speed or bus capacity. To help contain that gap, designers have added more lanes or added co-processors.

Marvell® Structera™ A does both. The first-of-its-kind device in a new industry category of memory accelerators, Structera A sports 16 Arm Neoverse N2 cores, 200 GB/sec of memory bandwidth and up to 4TB of memory, along with processing fabric and other Marvell-only technology, and consumes under 100 watts. It’s essentially a server-within-a-server with outsized memory bandwidth for bandwidth-intensive tasks like inference or deep learning recommendation models (DLRM). Cloud providers need to program their software to offload tasks to Structera A, but doing so brings a number of benefits.

Take a high-end x86 processor. Today it might sport 64 cores, 400 GB/sec of memory bandwidth and up to 2TB of memory (i.e. four top-of-the-line 512GB DIMMs), and consume a maximum of 400 watts, for a data transmission power rate of 1W per GB/sec.
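The power-per-bandwidth comparison can be checked with a few lines of arithmetic. This is a minimal sketch, not a benchmark: it reads both bandwidth figures as GB/sec (the unit the 1W per GB/sec figure implies), it treats Structera A's "under 100 watts" as an upper bound of 100 watts, and the function name `watts_per_gb_per_sec` is made up for illustration.

```python
def watts_per_gb_per_sec(power_watts: float, bandwidth_gb_per_sec: float) -> float:
    """Return power spent per unit of memory bandwidth, in W per GB/sec."""
    if bandwidth_gb_per_sec <= 0:
        raise ValueError("bandwidth must be positive")
    return power_watts / bandwidth_gb_per_sec


# High-end x86 processor from the text: 400 W maximum, 400 GB/sec.
x86_rate = watts_per_gb_per_sec(400, 400)

# Structera A from the text: under 100 W, 200 GB/sec.
# Using 100 W makes this an upper bound (assumption), not a measured value.
structera_a_upper_bound = watts_per_gb_per_sec(100, 200)

print(f"x86:         {x86_rate:.2f} W per GB/sec")
print(f"Structera A: under {structera_a_upper_bound:.2f} W per GB/sec")
```

Under those assumptions, the accelerator moves each GB/sec of memory traffic for less than half the power of the host processor.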

By Michael Kanellos, Head of Influencer Relations, Marvell

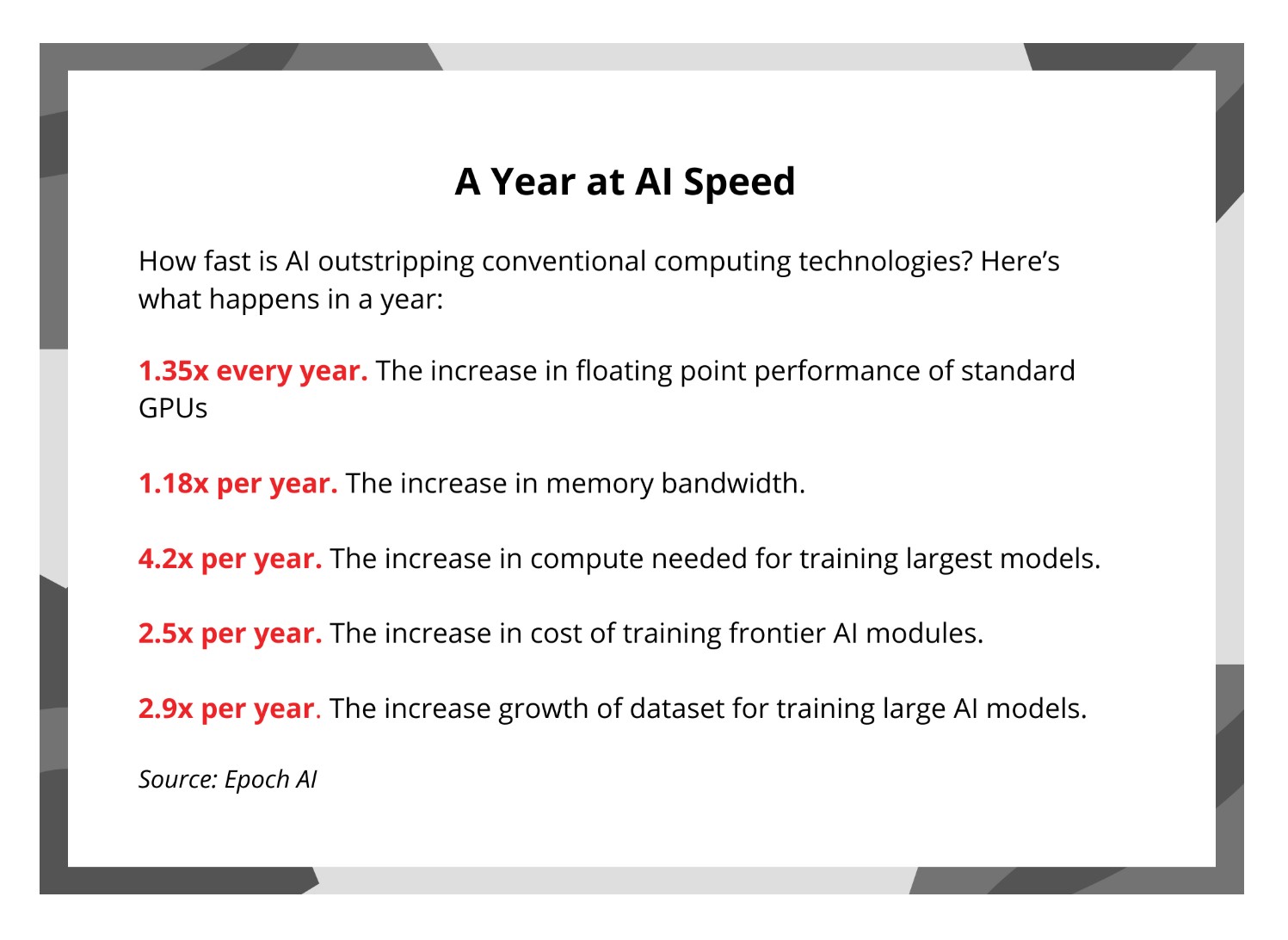

Bigger is better, right? Look at AI: the story swirls with superlatives.

ChatGPT landed one million users within five days,¹ far surpassing the pace of any previous technology. The compute requirements of training notable AI models increase 4.5x per year, while training data sets mushroom by 3x per year.²

Bigger, however, comes at a price. Data center power consumption threatens to nearly triple by 2028, primarily because of AI.³ Water withdrawals, meanwhile, are escalating as well: by 2027, AI data centers could need up to 6.6 billion cubic meters, or about half of what the UK uses.⁴ The economic and environmental toll over the long run may not be sustainable.

Conceptually, it is easy to understand how larger models translate into "better and more capable" models: the more layers or parameters a model has, the more they contribute to its quality and accuracy. Yet can we keep extracting that value at the same cadence by continuing to increase size? Or will the curve start to plateau at some point?

Here at Marvell, we talk frequently to our customers and end users about I/O technology and connectivity. This includes presentations on I/O connectivity at various industry events and delivering training to our OEMs and their channel partners. Often, when discussing the latest innovations in Fibre Channel, audience questions will center around how relevant Fibre Channel (FC) technology is in today’s enterprise data center. This is understandable as there are many in the industry who have been proclaiming the demise of Fibre Channel for several years. However, these claims are often very misguided due to a lack of understanding about the key attributes of FC technology that continue to make it the gold standard for use in mission-critical application environments.

From inception several decades ago, and still today, FC technology is designed to do one thing, and one thing only: provide secure, high-performance, and high-reliability server-to-storage connectivity. While the Fibre Channel industry is made up of a select few vendors, the industry has continued to invest and innovate around how FC products are designed and deployed. This isn’t just limited to doubling bandwidth every couple of years but also includes innovations that improve reliability, manageability, and security.

By Kristin Hehir, Senior Manager, PR and Marketing, Marvell

The sheer volume of data traffic moving across networks daily is mind-boggling almost any way you look at it. During the past decade, global internet traffic grew by approximately 20x, according to the International Energy Agency. One contributing factor to this growth is the popularity of mobile devices and applications: smartphone users spend an average of five hours a day, or nearly one-third of their time awake, on their devices, up from three hours just a few years ago. The result is incredible amounts of data in the cloud that need to be processed and moved. Around 70% of data traffic is east-west traffic, or the data traffic inside data centers. Generative AI, and the exponential growth in the size of data sets needed to feed AI, will invariably continue to push the curve upward.

Yet, for more than a decade, total power consumption has stayed relatively flat thanks to innovations in storage, processing, networking and optical technology for data infrastructure. The debut of PAM4 digital signal processors (DSPs) for accelerating traffic inside data centers and coherent DSPs for pluggable modules has played a large, but often quiet, role in paving the way for growth while reducing cost and power per bit.

Marvell at ECOC 2023

At Marvell, we’ve been gratified to see these technologies get more attention. At the recent European Conference on Optical Communication, Dr. Loi Nguyen, EVP and GM of Optical at Marvell, talked with Lightwave editor in chief Sean Buckley about how Marvell 800 Gbps and 1.6 Tbps technologies will enable AI to scale.

By Dr. Radha Nagarajan, Senior Vice President and Chief Technology Officer, Optical and Cloud Connectivity Group, Marvell

This article was originally published in Data Center Knowledge

People or servers?

Communities around the world are debating this question as they try to balance the plans of service providers and the concerns of residents.

Last year, the Greater London Authority told real estate developers that new housing projects in West London may not be able to go forward until 2035 because data centers have taken all of the excess grid capacity.¹ EirGrid² said it won’t accept new data center applications until 2028. Beijing³ and Amsterdam have placed strict limits on new facilities. Cities in the southwest and elsewhere,⁴ meanwhile, are increasingly worried about water consumption, as mega-sized data centers can use over 1 million gallons a day.⁵

When you add in the additional computing cycles needed for AI and applications like ChatGPT, the conflict becomes even more heated.

On the other hand, we know we can’t live without them. Modern society, with its remote work, digital streaming and modern communications, depends on data centers. Data centers are also one of sustainability’s biggest success stories. Although workloads grew by approximately 10x in the last decade with the rise of SaaS and streaming, total power consumption stayed almost flat at around 1% to 1.5%⁶ of worldwide electricity thanks to technology advances, workload consolidation, and new facility designs. Try to name another industry that increased output by 10x on a relatively fixed energy diet.